What is generative AI?

Generative AI is a catch-all phrase for applications that use machine learning models, such as large language models, to create new content. Machine learning started with predicting outcomes based on a large set of data (millions of data points). More recently, it has moved to algorithms trained to create new data.

A Markov chain is a simpler statistical model for text prediction. The Markov method predicts the next word by looking at only a few previous words. Because these models cannot look far back in the text, they are not good at producing coherent text the way ChatGPT does.
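To make the idea concrete, here is a minimal sketch of a Markov-style next-word predictor that looks at only one previous word. The toy corpus and the function names `build_bigram_model` and `generate` are made up for illustration; real systems use far larger corpora and longer contexts.

```python
import random
from collections import defaultdict


def build_bigram_model(text):
    """Map each word to the list of words that followed it in the text."""
    words = text.lower().split()
    model = defaultdict(list)
    for current, following in zip(words, words[1:]):
        model[current].append(following)
    return model


def generate(model, start, length=8, seed=0):
    """Walk the chain: repeatedly sample a successor of the last word."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length - 1):
        successors = model.get(output[-1])
        if not successors:  # dead end: this word was never followed by anything
            break
        output.append(rng.choice(successors))
    return " ".join(output)


# Toy corpus, invented for this example.
corpus = "the cat sat on the mat and the dog sat on the rug"
model = build_bigram_model(corpus)
print(generate(model, "the"))
```

Because each step sees only the last word, the output is locally plausible but drifts quickly, which is the limitation described above.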

What has changed now for the recent hype of generative AI?

The technology behind such models has existed for a few decades. What has changed is the complexity of the models, their architecture, and their applicability to real-world scenarios: today's models generate more complex objects and scale to solve larger problems.

Complexity

What is this increased complexity for training models?

Large language models have brought a shift in recent years. The focus has moved from building machine learning algorithms trained on a specific dataset toward training large, complex models with billions of parameters on massive datasets.

ChatGPT was trained on an enormous amount of publicly available text. In this massive text corpus, words and sentences appear in sequences with specific dependencies. This recurrence helps the model learn how to cut text into statistical chunks with some predictability. It learns the patterns of these blocks of text and uses this knowledge to propose what might come next.

Architecture

What has changed in the architecture? What has been the evolution of Generative AI?

A generative adversarial network (GAN) is a machine learning architecture proposed by researchers at the University of Montreal. A GAN uses two models that are trained against each other. One, the generator, learns to produce a target object (such as an image), and the other, the discriminator, learns to tell actual objects from generated ones.

Later, Google researchers introduced the transformer architecture, which is used to develop large language models (LLMs). ChatGPT is powered by transformer models. In natural language processing, a transformer encodes each word in a text as a token and generates an attention map, which captures each token's relationship with the other tokens. This attention map helps the transformer understand the context when it generates new text.

These are just two of the architectures used in generative AI development; the field is not limited to them.

What is a Large Language Model (LLM)?

In simple terms, LLMs are programs that recognize and generate text (human language) after being trained on enormous data sets.

How do LLMs work?

Deep learning is used to understand how characters, words, and sentences function together. Deep learning involves the probabilistic analysis of unstructured data (text), eventually enabling the deep learning model to recognize the context between pieces of content without human intervention.

LLMs are then further trained via tuning: fine-tuned or prompt-tuned to the particular task you want them to do, such as interpreting questions and generating responses or understanding human intent.

Neural Network (NN)

What is a Neural Network?

Neural networks enable deep learning for LLMs. A neural network (NN) is a software program that teaches computers to process data in a way inspired by the human brain. Deep learning uses interconnected nodes, or neurons, arranged in a layered structure that loosely resembles the brain. Each neuron is a small software module that performs a mathematical calculation (summation, exponentiation, etc.), and the neurons work together to solve a problem.
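As a rough illustration of neurons working together in layers, the sketch below computes a weighted sum for each neuron and passes it through a sigmoid activation. The weights, biases, and inputs are arbitrary values chosen for the example, not taken from any trained model.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron(inputs, weights, bias):
    """One neuron: weighted sum of the inputs plus a bias, then an activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(total)


def layer(inputs, weight_rows, biases):
    """A layer is several neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Two inputs -> hidden layer of two neurons -> one output neuron.
hidden = layer([0.5, -1.0], [[0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1])
output = neuron(hidden, [1.0, -1.0], 0.0)
print(hidden, output)
```

Training a network means adjusting the weights and biases until outputs like this one match the desired answers.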

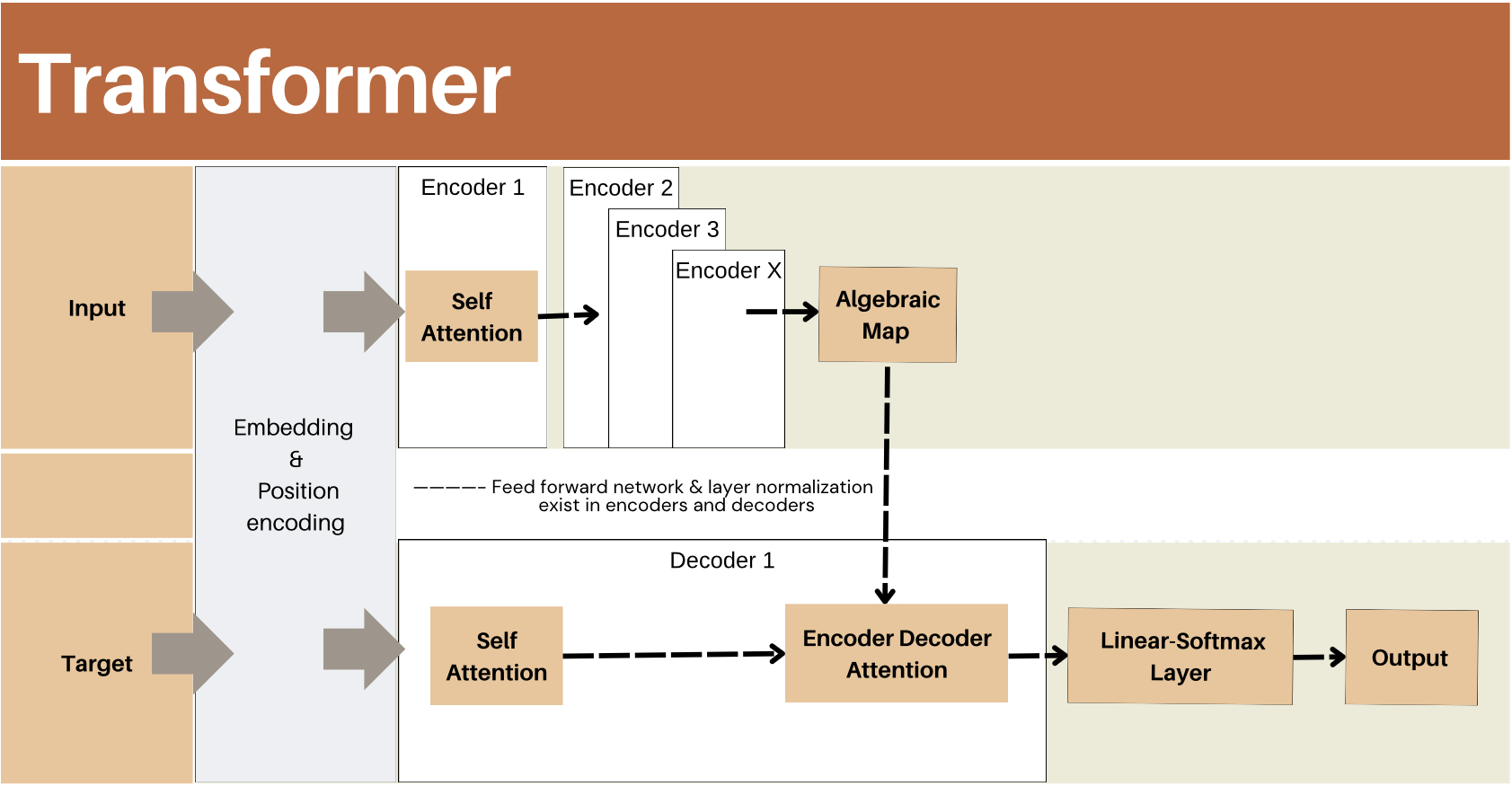

Transformer Model

LLMs use a specific kind of NN, the transformer, to understand the context of human language. This neural network applies a mathematical technique called self-attention. The model learns context by tracking the relationships between elements of sequential data (such as the words in a sentence). Self-attention can even detect the influence of distant data points in a sequence.
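The sketch below shows the core of self-attention in plain Python: every token vector is compared with every other token vector, the scores are normalized with softmax, and each output is a weighted mix of all tokens. It is a simplification that assumes the queries, keys, and values are the raw token vectors themselves; real transformers first apply learned projection matrices. The two-dimensional vectors are invented for the example.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product attention with queries = keys = values = vectors."""
    dim = len(vectors[0])
    attention_map, outputs = [], []
    for query in vectors:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        attention_map.append(weights)
        # Each output is a weighted average of all token vectors.
        outputs.append(
            [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        )
    return attention_map, outputs


# Hypothetical embeddings for a three-token sentence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attention_map, outputs = self_attention(tokens)
for row in attention_map:
    print([round(w, 3) for w in row])
```

Each row of the attention map says how strongly one token attends to every token in the sequence, regardless of how far apart they are.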

The transformer model was introduced in a Google paper in 2017. In 2021, Stanford researchers described models of this kind as foundation models. Applications that work with sequential text, images, or video are good candidates for the transformer model, such as transcribing speech to text in near real time, speeding up drug discovery in healthcare, and detecting trends and anomalies. You have likely used a transformer model when searching on Google or Bing.

How does the transformer model work?

As mentioned earlier, a transformer encodes text as tokens. Transformers use positional encoding to tag each element of the sequence with its position. These tags feed into the calculation of the attention map, which captures the relationships between elements. With this addition, computers can pick up many of the same patterns humans see.
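One common way to tag positions is the fixed sinusoidal scheme from the original transformer paper, sketched below. Treat it as one possible approach rather than what any particular product uses; many models learn their position tags instead. The sizes in the example are arbitrary.

```python
import math


def positional_encoding(num_positions, dim):
    """Sinusoidal position tags: sine on even indices, cosine on odd ones."""
    table = []
    for pos in range(num_positions):
        row = []
        for i in range(dim):
            # Each pair of dimensions shares one wavelength.
            angle = pos / (10000 ** (2 * (i // 2) / dim))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def add_positions(embeddings):
    """Add the position tag to each token embedding, element by element."""
    tags = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + t for e, t in zip(emb, tag)] for emb, tag in zip(embeddings, tags)]


table = positional_encoding(num_positions=3, dim=4)
for row in table:
    print([round(value, 3) for value in row])
```

Two identical words at different positions now get different vectors, so the attention calculation can take word order into account.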

What are the different uses of generative AI with neural networks and transformer models?

There are many real-world applications for neural networks.

- Intent recognition

- Financial predictions by using historical data

- Medical image processing

- Energy demand forecasting

- Process controls and automation

- Speech recognition and summarization

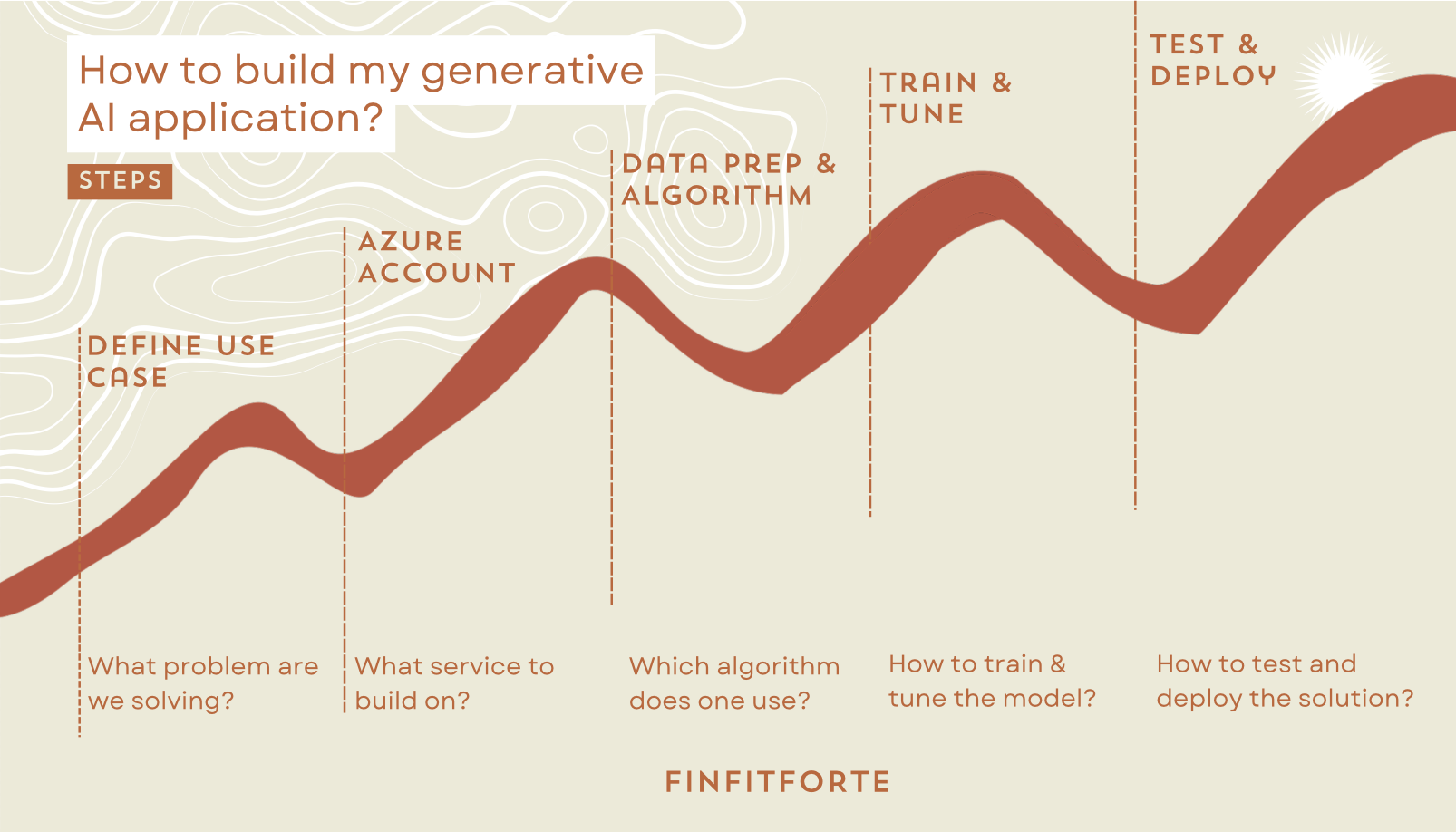

How do I build my first generative AI applications?

You can use multiple Azure services and tools to develop cognitive, intuitive, and intelligent applications. Azure provides various products, such as Azure OpenAI, Azure Machine Learning, Azure Cognitive Services, Azure Databricks, and Azure GPU-enabled virtual machines.

Use this guide to build your application on Azure.

Define the generative AI use case

What problem are you solving with the AI solution? Define the problem that needs a creative AI solution, such as text generation, image synthesis, or intent recognition.

For instance, we want to turn our marketing ideas into marketing content and material.

Set up your Azure account

Azure OpenAI provides API access to OpenAI's powerful LLMs.

First, create an Azure subscription. Second, complete the prerequisites for access to the API. Third, set up your development environment. Then proceed with building your application and receiving outputs.

You can build your application to provide marketing stories, social media posts, etc. You can also add guardrails around your application to protect it from unauthorized or malicious prompts.
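A minimal sketch of such a call, using the `AzureOpenAI` client from the `openai` Python package, might look like the following. The environment variable names, the prompt wording, the API version string, and the helper names `build_messages` and `generate_post` are assumptions for illustration; use the endpoint, key, and deployment name from your own Azure OpenAI resource.

```python
import os


def build_messages(marketing_idea):
    """Wrap a marketing idea in a chat-style prompt (wording is illustrative)."""
    return [
        {"role": "system", "content": "You write short, friendly marketing copy."},
        {"role": "user", "content": f"Write a social media post about: {marketing_idea}"},
    ]


def generate_post(marketing_idea):
    """Send the prompt to an Azure OpenAI chat deployment and return the text."""
    # Imported here so build_messages works even without the SDK installed.
    from openai import AzureOpenAI  # pip install openai

    client = AzureOpenAI(
        azure_endpoint=os.environ["AZURE_OPENAI_ENDPOINT"],  # assumed variable name
        api_key=os.environ["AZURE_OPENAI_API_KEY"],          # assumed variable name
        api_version="2024-02-01",  # pick a version your resource supports
    )
    response = client.chat.completions.create(
        model=os.environ["AZURE_OPENAI_DEPLOYMENT"],  # your deployment name
        messages=build_messages(marketing_idea),
    )
    return response.choices[0].message.content


messages = build_messages("eco-friendly water bottles")
print(messages[1]["content"])
```

Calling `generate_post("eco-friendly water bottles")` would return the generated text, provided the three environment variables are set and a chat model is deployed. The system message is also a natural place to start adding guardrails.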

Data preparation

Data preparation is what makes a solution robust. Start by collecting high-quality data that represents the domain and the problem well. Once collected, the data undergoes rigorous cleaning, formatting, and structuring to eliminate errors, inconsistencies, and noise.
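As a small example of the cleaning step, the sketch below normalizes whitespace, drops empty records, and removes case-insensitive duplicates from a list of text snippets. The sample records and the function name `clean_records` are invented; real pipelines usually add domain-specific checks on top.

```python
import re


def clean_records(records):
    """Normalize whitespace, drop empty entries, and remove duplicates."""
    seen = set()
    cleaned = []
    for text in records:
        normalized = re.sub(r"\s+", " ", text).strip()
        if not normalized:  # skip records that are empty after trimming
            continue
        key = normalized.lower()  # compare case-insensitively
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(normalized)
    return cleaned


raw = [
    "  Summer sale:  20% off! ",
    "",
    "summer sale: 20% off!",
    "New arrivals\nthis week",
]
print(clean_records(raw))
# → ['Summer sale: 20% off!', 'New arrivals this week']
```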

Choose a generative AI algorithm

An application's output depends mainly on the algorithm you select. For example, GANs are a common choice if you aim to generate images. For sequential text, transformer-based language models are the usual choice. To ensure the effectiveness of your application, consider the type of data you generate, whether it’s text, images, videos, etc.

Train and tune the AI model

Training a model involves feeding your algorithm with annotated data. The training process is iterative: your model progressively improves its performance with each pass over the data.
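The loop below is a toy illustration of that progressive improvement: a one-parameter model is fitted to a few annotated (input, label) pairs with gradient descent, and the loss is recorded after every pass. The data, learning rate, and epoch count are arbitrary choices for the example; training a real generative model follows the same pattern at a vastly larger scale.

```python
def train(examples, epochs=50, learning_rate=0.05):
    """Fit y = weight * x by gradient descent on the mean squared error."""
    weight = 0.0
    losses = []
    n = len(examples)
    for _ in range(epochs):
        loss = 0.0
        gradient = 0.0
        for x, y in examples:
            error = weight * x - y
            loss += error ** 2
            gradient += 2 * error * x
        losses.append(loss / n)
        # Step against the gradient to reduce the loss on the next pass.
        weight -= learning_rate * gradient / n
    return weight, losses


# Annotated data: each input x is labeled with y = 2 * x.
examples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
weight, losses = train(examples)
print(round(weight, 4), losses[0], losses[-1])
```

The first recorded loss is large and the last is close to zero, which is what progressive improvement looks like in practice.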

Tuning your model involves additional training of a pre-existing model, which has previously acquired patterns and features from an extensive dataset, using a smaller, domain-specific dataset. You can use different techniques, such as full fine-tuning or PEFT (Parameter-Efficient Fine-Tuning).
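To see why parameter-efficient methods matter, the arithmetic below compares how many values are trained for a single weight matrix under full fine-tuning and under low-rank adaptation (LoRA), one widely used parameter-efficient technique that the text above does not name. The matrix size and rank are illustrative assumptions.

```python
def full_finetune_params(d_in, d_out):
    """Full fine-tuning updates every entry of the d_out x d_in weight matrix."""
    return d_in * d_out


def lora_params(d_in, d_out, rank):
    """LoRA trains two small matrices: (d_out x rank) and (rank x d_in)."""
    return rank * (d_in + d_out)


d_in = d_out = 4096  # assumed layer width
rank = 8             # assumed adapter rank

full = full_finetune_params(d_in, d_out)
lora = lora_params(d_in, d_out, rank)
print(full)                   # 16777216
print(lora)                   # 65536
print(f"{lora / full:.2%}")   # 0.39%
```

Under these assumptions, the adapter trains well under one percent of the values that full fine-tuning would touch for the same layer.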

Test and validate your AI solution and deploy

Align your application with the use case through rigorous testing, including functional, unit, and performance tests. Validation checks whether the algorithm meets your intended accuracy and performance.
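A validation check can be as simple as comparing predictions on held-out examples with their known labels and testing the score against a target. The sketch below does this for an intent recognition use case; the labels, predictions, and the 0.9 target are made-up values.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the expected labels."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def validate(predictions, labels, target=0.9):
    """Return the score and whether it meets the intended accuracy."""
    score = accuracy(predictions, labels)
    return score, score >= target


# Hypothetical held-out intents and the model's predictions for them.
labels = ["buy", "refund", "greet", "greet"]
predictions = ["buy", "refund", "buy", "greet"]

score, passed = validate(predictions, labels)
print(score, passed)  # → 0.75 False
```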

During deployment, ensure that the solution scales and uses appropriate computational resources. Furthermore, adequately monitor your application for error detection and optimization.

Conclusion

Generative AI has revolutionized how we interact with technology, leveraging advanced models like large language models (LLMs) powered by neural networks and transformer architectures. These models process vast amounts of data to generate human-like text, images, and other content. Building a generative AI application involves several key steps, including defining the problem, selecting the appropriate model, training it on relevant datasets, and fine-tuning its performance through iterative testing. By understanding the underlying mechanisms and methodologies, developers can create innovative applications that harness the power of generative AI to enhance user experiences and drive creativity.